In late February, U.S. Deputy Chief Technology Officer Lynne Parker traveled to Paris with a mission.

She had come for a meeting with other government officials at the Organization for Economic Cooperation and Development (OECD), and she wanted to spread the word that it was high time for the West to join forces and beat China at writing the global rules for artificial intelligence.

Parker's plea came as a surprise to some. Less than two years earlier, Donald Trump's White House had rejected a similar pitch by France and Canada during a Group of Seven (G7) meeting, arguing that international rules for AI would be premature and would hamper innovation.

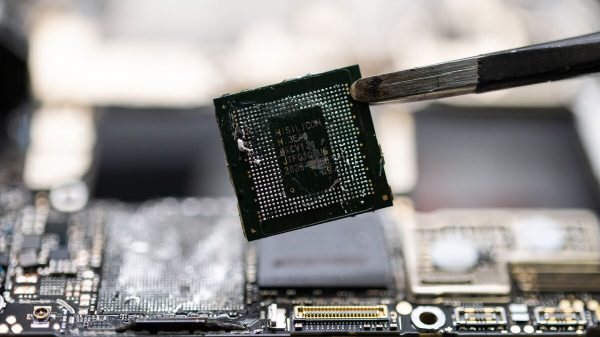

But China's blatant ambitions to become the world leader in AI had since alarmed U.S. policymakers and tech executives. Not only was Beijing pouring billions into the research and development of AI, but reports also suggested that the Chinese government was using it to build up an all-seeing surveillance state. And it was becoming increasingly clear that, as the country's tech giants were exporting their technology around the globe, China was pushing for its ideas to become international standards.

This convinced the White House that, despite differences with its allies over what rules are needed, Western countries should band together to make sure their companies, rather than Beijing's, remained at the forefront of developing AI.


“We'll never apologize for wanting to have the use of technology in a way that is consistent with our values,” Parker told POLITICO over the phone from Paris. By joining ranks, she added, the West would be able to come up with “a good counter to what China is doing.”

What she didn't say at the time was that the White House was also ready to revisit the Franco-Canadian proposal from 2018.

Four months later, in June 2020, the U.S. signed on to a tweaked version of the pitch, announcing that all G7 members (Canada, France, Germany, Italy, Japan, the U.K. and the U.S.) plus Australia, South Korea, Singapore, Mexico, India, Slovenia and the EU would launch an alliance dubbed the Global Partnership on Artificial Intelligence (GPAI) to support the “responsible and human-centric development and use” of AI.

The idea was to do it “in a manner consistent with human rights, fundamental freedoms and our shared democratic values,” the countries added in a statement.

In other words: a different approach from China's.

Trustworthy versus innovation?

As artificial intelligence changes societies around the world, both this new GPAI alliance and the OECD have emerged as the key arenas for the U.S., Europe and other Western countries to join forces and come up with global standards for AI.

But first, Europe and the U.S. need to see eye to eye, and that isn't a given. They agree on what they don't want: a future in which AI is used to build up an Orwellian surveillance apparatus. But they're far from able to agree on what they do want.

The EU, home of stringent privacy laws, wants to become a leader in “trustworthy” AI and plans to release the world's first laws for AI early next year. This, Brussels hopes, will protect Europeans against abuse and boost consumer trust in European AI while giving its industry a competitive advantage in the long run.

The U.S., on the other hand, considers Brussels' approach “heavy-handed” and “innovation-killing,” echoing the position of its own tech industry, whose representatives have warned that too many rules would stifle innovation. In feedback to a first draft of Europe's upcoming laws for AI, submitted to the EU in May, for instance, industry giant Google warned Brussels that too many new rules would “significantly weaken Europe's position vis-a-vis global competitors.”

The job of the new GPAI alliance, which will meet for the first time in December, will be to bring those two positions together.

A platform called RadVid-19 that identifies lung injuries through artificial intelligence is helping Brazilian doctors detect and diagnose coronavirus | Nelson Almeida/AFP via Getty Images

Few details have been shared with the public about the partnership. But a series of conversations with officials and appointed members, conducted on the condition of anonymity because the work is confidential, suggests that it does not intend to work on any legal instruments.

Still, its role will likely go beyond bringing top-notch AI scientists and policymakers to the same table. It could also influence the OECD's work on developing detailed AI principles, which in turn are meant to provide a framework for governments drafting hard laws.

Corporation rules

The work to form a Western alliance on AI comes as the technology is moving from the realm of science fiction into everyday use. Today, AI is used to treat cancer, predict the outbreak of violent conflicts, analyze satellite data to monitor migration patterns, power military operations and even write news articles.

But it also comes with significant risks, beyond being abused by governments to spy on their citizens: Most of today's cutting-edge AI systems are, for example, prone to discriminate against vulnerable groups and minorities. This has led to text analysis software labeling being Jewish or being gay as negative, British students from poor areas being disadvantaged during exams, or a Black American being arrested for a crime he did not commit.

Against this backdrop, organizations ranging from the Catholic Church to Big Tech corporations have all released nonbinding ethical principles for how to develop and use AI.

While European leaders are eyeing laws as sweeping as the General Data Protection Regulation, the U.S. has been pushing a light-touch approach to regulation within its own borders.

Yet these nonbinding principles have not stopped companies and governments from rolling out invasive technology, including in privacy-conscious Europe, where law enforcement is now using facial-recognition technology with minimal public oversight.

What rules do exist have largely been developed by industry players — such as Google, Facebook and Apple in the U.S., or Tencent and Alibaba in China — for themselves.

“The rules for AI are, in effect, being written in code by the biggest tech companies who have the biggest AI research and the biggest pools of data and the biggest connection to a number of people who are the providers of data,” said Mark Surman, the executive director of the Mozilla Foundation.

President of the European Commission Ursula von der Leyen at the AI Xperience Center of the VUB university in Brussels | Pool photo by Stephanie Lecocq via Getty Images

While policymakers have long been told by the industry that AI systems were black boxes whose inner workings were virtually impossible to understand, that argument is now falling on deaf ears.

Joanna Bryson, a computer scientist and professor of ethics and technology at the Berlin-based Hertie School, said that governments “are starting to understand that [the tech industry] is not that different from the pharma industry, or finance.”

“When you start to understand that it's all software … and someone chooses which algorithms to use, which data to use


POLITICO
