British students sent a clear message to policymakers last week: “F*ck the algorithm.”

The outcry worked. A week after algorithm-generated grades resulted in almost 40 percent of students getting lower grades than predicted, the government said students would get teacher-predicted grades after all.

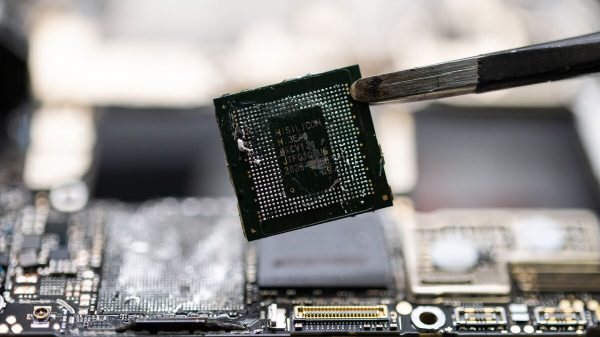

The scandal underlined the creeping influence of algorithms in our lives — and the subsequent pushback.

“From benefits fraud assessments, to visa algorithms, to predictive policing systems, we’re increasingly moving towards government by algorithm. And the grading fiasco this week in Britain shows that when people realise it’s there, they don’t like it,” said Cori Crider, whose tech justice group Foxglove backed a legal claim against the grading algorithm.

For governments, algorithms promise to make time-consuming and costly decision-making better, more efficient and cheaper. But critics say that automated systems are being rolled out with little transparency or public debate, and risk exacerbating existing inequalities.

Demand for greater transparency is further complicated by the owners of algorithms who argue they are trade secrets whose inner workings cannot be made public.

“The algorithm takes the biases and prejudices of the real world and bakes them in, and gives them a veneer that makes it seem like a policy choice is actually neutral and technical. But it isn’t,” said Crider.

The EU’s General Data Protection Regulation (GDPR) gives people the right to an explanation of a decision based on automated means, and people can object to the decision if it is based solely on an algorithm. But the grading scandal revealed the GDPR’s shortcomings in dealing with algorithms.

Regulators said the grading algorithm had not relied solely on automated means, as humans were involved in the process, implying that any objection to the decision on these grounds wouldn’t stand.


British computer scientist and privacy campaigner Michael Veale is also skeptical of the power of regulation like the GDPR to act as a bulwark against algorithms.

The right to an explanation of an algorithm-generated decision does little to fix “systemic injustices” built into algorithms like the one used to grade British students, he said.

“Getting an explanation but no democratic say in how systems work is like getting a privacy policy without a ‘do not consent’ button,” he said.

Here are some of the ways in which algorithms are running our lives.

1. Getting access to welfare

Deciding who gets access to welfare and how much is a costly business for governments, and so has become an area that has seen a boom in the use of algorithms. The Swedish city of Trelleborg has automated parts of its social benefits program so that new applications are combined with other databases to come to a decision. Denmark’s been getting on the welfare algorithm bandwagon too, building a “surveillance behemoth” in the process, activists say. In England, local authorities began using algorithms to determine how much money to spend on each person nearly 10 years ago. In one high-profile example, a registered blind wheelchair user had his allowance cut by £10,000 after his local authority began using the system.

But activists have started fighting back. In April, Dutch activists got a system used by the government to detect welfare fraud banned after a court found it violated privacy.

2. Getting across a border

Hungary, Greece and Latvia have trialled a system called iBorderCtrl that screens non-EU nationals at EU borders, using automated interviews with a virtual border guard, based on “deception detection technology.” The system is in effect an automated lie-detector test taken before the person arrives at the border. In Slovenia, a border police system automatically matches travellers to “other police data” such as criminal files.

Here again, there is pushback. Watchdogs have filed complaints against the Slovenian system, while the U.K. government said earlier this year it would scrap an algorithm used to screen visa applicants following a complaint. The groups behind the complaint argued the algorithm was racist and favored applications from countries with a predominantly white population.

3. Getting arrested

Using algorithms to model when and where crime will happen, an area known as predictive policing, is on the rise. In 2019, British civil rights group Liberty warned that the growth in the use of computer programs to predict crime hotspots and people who are likely to reoffend risks embedding racial profiling into the justice system.


politico
