Europe needs rules to make sure artificial intelligence won't be used to build up a China-style high-tech surveillance state, the European Union's top AI experts warn.

An expert panel is set to present the bloc's leaders with a list of 33 recommendations on how to move forward on AI governance on Wednesday, including a stark warning against the use of AI to control and monitor citizens.

In a 48-page final draft of the document, obtained by POLITICO, the experts urge policymakers to define “red lines” for high-risk AI applications — such as systems to mass monitor individuals or rank them according to their behavior — and discuss outlawing some controversial technology.

“Ban AI-enabled mass-scale scoring of individuals,” the expert group demands, adding that there needs to be “very clear and strict rules for surveillance for national security purposes and other purposes claimed to be in the public or national interest.”

But the EU's specialists also advise against imposing one-size-fits-all rules on low-risk AI applications, many of which are still in an early stage of development.

In terms of broader rules for artificial intelligence, the EU should think twice about drafting new legislation, the experts write, and first review existing regulation to make it fit for AI.

“Unnecessarily prescriptive regulation should be avoided,” the group writes. “Not all risks are equal,” they add, and lawmakers should focus on regulating those areas that pose the greatest risk.

The recommendations mark the EU's first shot at defining the conditions under which AI should be developed and deployed in its vast internal market. To date, they're the most detailed pitch on how Europe could catch up with the global frontrunners, the U.S. and China, in an ongoing race for AI supremacy.

Although non-binding, they're likely to influence policy decisions made by the next European Commission, which is set to take office in November and is expected to make AI a priority early on in its mandate.

Surveillance apparatus

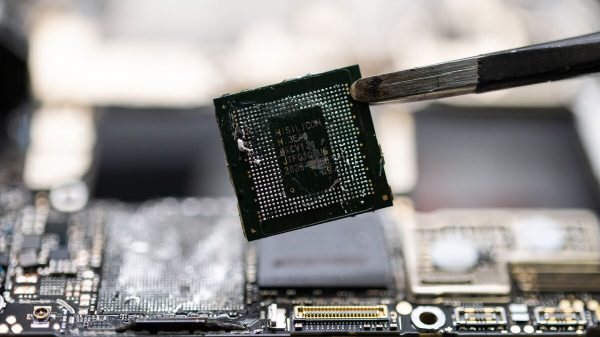

Artificial intelligence technology, allowing machines to do tasks that previously required human thinking, is about to revolutionize the way people live, work or go to war, and it offers vast opportunities from better treating cancer patients to making global supply chains more energy-efficient.

But it also comes with significant risks. The emerging technologies can, for example, be abused by authoritarian regimes to set up a ubiquitous surveillance apparatus.

Most prominently, China has been using cutting-edge AI to build up a high-tech surveillance system and crack down on political dissent, according to media reports — and other countries around the world, including European nations such as Serbia, have struck deals with Chinese companies to introduce their own systems aimed at monitoring individuals.

Even within the European Union, where citizens are protected by strict privacy laws, governments from London to Berlin have been dabbling in facial-recognition technology.

“We need [regulation], I'm convinced of that,” German Chancellor Angela Merkel said during a tech conference in Dresden last week, adding that “much of that should be European regulation.”

In what seems like a reference to Beijing's surveillance apparatus but without naming China, the EU's AI experts write in their recommendations that “individuals should not be subject to unjustified … identification, profiling and nudging through AI powered methods of biometric recognition.”

They pitch introducing a mandatory screening process for certain high-risk AI applications developed by the private sector and say that civil society should be involved in defining “red lines” for which applications should be banned.

Guiding principles vs hard rules

Experts warn that the risk posed by artificial intelligence goes beyond being abused by governments to spy on their citizens: Most of today's cutting-edge AI systems, for instance, work by finding correlations in huge masses of data and are prone to discriminate against minorities.

This has led to AI-powered recruiting tools discriminating against women, or text analysis software labeling being Jewish or being gay as negative.

Against this backdrop, a debate is raging among policymakers, researchers and the private sector over whether hard rules are necessary to regulate day-to-day AI applications, and who should be in charge of writing them.

“We have up to now a kind of light-touch approach: We don't want to kill innovation by regulation,” Justice Commissioner Věra Jourová said at an event in June.

“Trustworthy artificial
