As the industry continues to grapple with the Meltdown and Spectre attacks, operating system and browser developers in particular are continuing to develop and test schemes to protect against the problems. Simultaneously, microcode updates to alter processor behavior are also starting to ship.

Since news of these attacks first broke, it has been clear that resolving them is going to have some performance impact. Meltdown was presumed to have a substantial impact, at least for some workloads, but Spectre was more of an unknown due to its greater complexity. With patches and microcode now available (at least for some systems), that impact is now starting to become clearer. The situation is, as we should expect with these twin attacks, complex.

To recap: modern high-performance processors perform what is called speculative execution. They will make assumptions about which way branches in the code are taken and speculatively compute results accordingly. If they guess correctly, they win some extra performance; if they guess wrong, they throw away their speculatively calculated results. This is meant to be transparent to programs, but it turns out that this speculation slightly changes the state of the processor. These small changes can be measured, disclosing information about the data and instructions that were used speculatively.

With the Spectre attack, this information can be used to, for example, leak information within a browser (such as saved passwords or cookies) to a malicious JavaScript. With Meltdown, an attack that builds on the same principles, this information can leak data within the kernel memory.

Meltdown applies to Intel's x86 and Apple's ARM processors; it will also apply to ARM processors built on the new A75 design. Meltdown is fixed by changing how operating systems handle memory. Operating systems use structures called page tables to map between process or kernel memory and the underlying physical memory. Traditionally, the accessible memory given to each process is split in half; the bottom half, with a per-process page table, belongs to the process. The top half belongs to the kernel. This kernel half is shared between every process, using just one set of page table entries for every process. This design is both efficient—the processor has a special cache for page table entries—and convenient, as it makes communication between the kernel and process straightforward.

The fix for Meltdown is to split this shared address space. That way when user programs are running, the kernel half has an empty page table rather than the regular kernel page table. This makes it impossible for programs to speculatively use kernel addresses.

Spectre is believed to apply to every high-performance processor that has been sold for the last decade. Two versions have been shown. One version allows an attacker to "train" the processor's branch prediction machinery so that a victim process mispredicts and speculatively executes code of an attacker's choosing (with measurable side-effects); the other tricks the processor into making speculative accesses outside the bounds of an array. The array version operates within a single process; the branch prediction version allows a user process to "steer" the kernel's predicted branches, or one hyperthread to steer its sibling hyperthread, or a guest operating system to steer its hypervisor.

We have written previously about the responses from the industry. By now, Meltdown has been patched in Windows, Linux, macOS, and at least some BSD variants. Spectre is more complicated; at-risk applications (notably, browsers) are being updated to include certain Spectre mitigating techniques to guard against the array bounds variant. Operating system and processor updates are needed to address the branch prediction version. The branch prediction version of Spectre requires both operating system and processor microcode updates. While AMD initially downplayed the significance of this attack, the company has since published a microcode update to give operating systems the control they need.

These different mitigation techniques all come with a performance cost. Speculative execution is used to make the processor run our programs faster, and branch predictors are used to make that speculation adaptive to the specific programs and data that we're using. The countermeasures all make that speculation somewhat less powerful. The big question is, how much?

Meltdown

When news of the Meltdown attack leaked, estimates were that the performance hit could be 30 percent, or even more, based on certain synthetic benchmarking. For most of us, it looks like the hit won't be anything like that severe. But it will have a strong dependence on what kind of processor is being used and what you're doing with it.

The good news, such as it is, is that if you're using a modern processor—Skylake, Kaby Lake, or Coffee Lake—then in normal desktop workloads, the performance hit is negligible, a few percentage points at most. This is Microsoft's result in Windows 10; it has also been independently tested on Windows 10, and there are similar results for macOS.

Of course, there are wrinkles. Microsoft says that Windows 7 and 8 are generally going to see a higher performance impact than Windows 10. Windows 10 moves some things, such as parsing fonts, out of the kernel and into regular processes. So even before Meltdown, Windows 10 was incurring a page table switch whenever it had to load a new font. For Windows 7 and 8, that overhead is now new.

The overhead of a few percent assumes that workloads are standard desktop workloads; browsers, games, productivity applications, and so on. These workloads don't actually call into the kernel very often, spending most of their time in the application itself (or idle, waiting for the person at the keyboard to actually do something). Tasks that use the disk or network a lot will see rather more overhead. This is very visible in TechSpot's benchmarks. Compute-intensive workloads such as Geekbench and Cinebench show no meaningful change at all. Nor do a wide range of games.

But fire up a disk benchmark and the story is rather different. Both CrystalDiskMark and ATTO Disk Benchmark show some significant performance drop-offs under high levels of disk activity, with data transfer rates declining by as much as 30 percent. That's because these benchmarks do virtually nothing other than issue back-to-back calls into the kernel.

Phoronix found similar results in Linux: around a 12-percent drop in an I/O intensive benchmark such as the PostgreSQL database's pgbench but negligible differences in compute-intensive workloads such as video encoding or software compilation.

A similar story would be expected from benchmarks that are network intensive.

Why does the workload matter?

The special cache used for page table entries, called the translation lookaside buffer (TLB), is an important and limited resource that contains mappings from virtual addresses to physical memory addresses. Traditionally, the TLB gets flushed—emptied out—every time the operating system switches to a different set of page tables. This is why the split address was so useful; switching from a user process to the kernel could be done without having to switch to a different set of page tables (because the top half of each user process is the shared kernel page table). Only switching from one user process to a different user process requires a change of page tables (to switch the bottom half from one process to the next).

The dual page table solution to Meltdown increases the number of switches, forcing the TLB to be flushed not just when switching from one user process to the next, but also when one user process calls into the kernel. Before dual page tables, a user process that called into the kernel and then received a response wouldn't need to flush the TLB at all, as the entire operation could use the same page table. Now, there's one page table switch on the way into the kernel, and a second, back to the process' page table, on the way out. This is why I/O intensive workloads are penalized so heavily: these workloads switch from the benchmark process into the kernel and then back into the benchmark process over and over again, incurring two TLB flushes for each roundtrip.

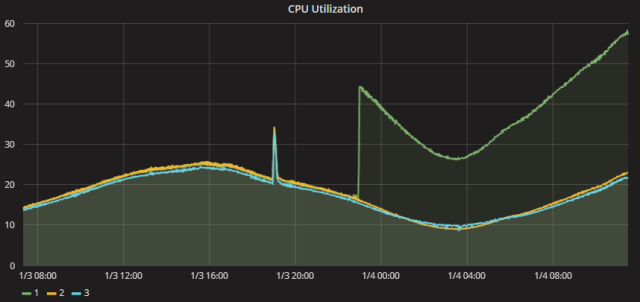

This is why Epic has posted about significant increases in server CPU load since enabling the Meltdown protection. A game server will typically run as on a dedicated machine, as the sole running process, but it will perform lots of network I/O. This means that it's going from "hardly ever has to flush the TLB" to "having to flush the TLB thousands of times a second."

The situation for old processors is even worse. The growth of virtualization has put the TLB under more pressure than ever before, because with virtualization, the processor has to switch between kernels too, forcing extra TLB flushes. To reduce that overhead, a feature called Process Context ID (PCID) was introduced by Intel's Westmere architecture, and a related instruction, INVPCID (invalidate PCID) with Haswell. With PCID enabled, the way the TLB is used and flushed changes. First, the TLB tags each entry with the PCID of the process that owns the entry. This allows two different mappings from the same virtual address to be stored in the TLB as long as they have a different PCID. Second, with PCID enabled, switching from one set of page tables to another doesn't flush the TLB any more. Since each process can only use TLB entries that have the right PCID, there's no need to flush the TLB each time.

While this seems obviously useful, especially for virtualization—for example, it might be possible to give each virtual machine its own PCID to cut out the flushing when switching between VMs—no major operating system bothered to add support for PCID. PCID was awkward and complex to use, so perhaps operating system developers never felt it was worthwhile. Haswell, with INVPCID, made using PCIDs a bit simpler by providing an instruction to explicitly force processors to discard TLB entries belonging to a particular PCID, but still there was zero uptake among mainstream operating systems.

That's until Meltdown. The Meltdown dual page tables require processors to perform more TLB flushing, sometimes a lot more. PCID is purpose-built to enable switching to a different set of page tables without having to wipe out the TLB. And since Meltdown needed patching, those Windows and Linux developers were finally given a good reason to use PCID and INVPCID.

As such, Windows will use PCID if the hardware supports INVPCID—that means Haswell or newer. If the hardware doesn't support INVPCID, then Windows won't fall back to using plain PCID; it just won't use the feature at all. In Linux, initial efforts were made to support PCID and INVPCID. The PCID-only changes were then removed due to their complexity and awkwardness.

This makes a difference. In a synthetic benchmark that tests only the cost of switching into the kernel and back again, an unpatched Linux system can switch about 5.2 million times a second. Dual page tables slashes that to 2.2 million a second; dual page tables with PCID gets it back up to 3 million.

Those overheads of sub-1 percent for typical desktop workloads were using a machine with PCID and INVPCID support. Without that support, Microsoft writes that in Windows 10 "some users will notice a decrease in system performance" and, in Windows 7 and 8, "most users" will notice a performance decrease.

[contf] [contfnew]

Ars Technica

[contfnewc] [contfnewc]